6 Cloud Cost Optimisation Issues To Avoid In 2022

February 10, 2020

With the start of a New Year, people tend to make resolutions like eating more veg, exercising more often, or cutting down on cloud costs.

The latter makes the list as the top initiative for companies for the fifth year in a row. According to the 2021 State of the Cloud Report, over 60% of organizations planned to optimize their cloud costs.

So what goes wrong, and why do so many engineering teams struggle with cloud optimization?

Read on to discover the most common issues that make us waste cloud resources, time, and money, and find out proven solutions to them.

In this guide, you will find:

Why is cloud cost optimization so challenging?

The 6 top cloud cost optimization problems to fix in 2022

- Still getting lured by reservations and savings plans

- Falling into the trap of overprovisioning

- Getting haunted by orphaned cloud resources

- Managing drops and spikes in demand inefficiently

- Not tapping into the opportunity of spot instances

- Delaying the adoption of automated cloud optimization

Why is cloud cost optimization so challenging?

The pay-per-use model of the public cloud has brought more freedom to engineering teams, but this freedom comes at a price, and a surprisingly hefty one at times, as cloud bill stories from companies like Pinterest demonstrate.

Research shows that most organizations struggle to handle their growing cloud expenses: on average, public cloud spend exceeded budget by 24%.

Cloud providers aren’t exactly helping to reduce costs either. Merely deciphering a cloud bill can be daunting. It can be so intimidating that some teams choose to leave their cloud bill to surprise them at the end of the month.

#1: Still getting lured by reservations and savings plans

The first thing that comes to mind when thinking of saving on the cloud is to pay less for the services your team uses. Companies choose savings plans or reservations because they come with substantial discounts compared to the on-demand pricing model.

It sounds great to pay upfront for a seemingly predictable cloud spend.

But if you look closely, you’ll see that rather than solving the problem, you’re getting a discount on the issue and committing to it for another few years.

So where’s the catch?

Do you remember how Pinterest had committed to $170 million worth of AWS services in advance but then had to splash out an additional $20 million for extra resources?

Their story helps to illustrate the fact that knowing how much capacity you’ll need in one to three years from now is a tall order.

By committing to one provider for so long, you lose flexibility, get locked in, and may have to pay a hefty price for changing requirements.
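The trade-off behind a commitment can be put into rough numbers. The sketch below assumes a simplified model in which a commitment is paid for whether or not it is used; the discount and utilization figures are hypothetical and are not taken from the Pinterest story or any provider's price list.

```python
def effective_hourly_cost(on_demand_rate: float, discount: float, utilization: float) -> float:
    """Cost per hour actually used when the committed capacity is billed regardless of use.

    on_demand_rate: on-demand price per hour (hypothetical figure)
    discount:       commitment discount as a fraction, e.g. 0.4 for 40%
    utilization:    fraction of the committed hours really used, in (0, 1]
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    committed_rate = on_demand_rate * (1 - discount)
    return committed_rate / utilization


def break_even_utilization(discount: float) -> float:
    """Utilization below which the commitment costs more than staying on demand."""
    return 1 - discount


# Hypothetical example: a 40% discount, but only half of the commitment is used.
print(round(effective_hourly_cost(1.00, 0.40, 0.50), 2))
print(round(break_even_utilization(0.40), 2))
```

In this model, a 40% discount only pays off if at least 60% of the committed capacity is actually used, which is exactly the forecast that is hard to get right one to three years ahead.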

How to deal with savings plans?

Well, the best solution is to avoid savings plans altogether!

Don’t purchase resources in advance, but consider approaches that address the cloud spend problem itself, such as:

- Rightsizing

- Autoscaling

- Bin packing

- Resource scheduling.
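To make one of these approaches concrete, here is a minimal sketch of bin packing using the first-fit decreasing heuristic. It assumes identical nodes and a single resource dimension (CPU in millicores); real schedulers also weigh memory, affinity rules, and more, so treat this as an illustration rather than how any particular tool works.

```python
def first_fit_decreasing(requests: list[int], node_capacity: int) -> list[list[int]]:
    """Place workload CPU requests onto equal-sized nodes, largest request first.

    Returns one list of placed requests per node. The heuristic is not
    guaranteed to find the minimum number of nodes, but it is usually close.
    """
    nodes: list[list[int]] = []   # requests placed on each node
    free: list[int] = []          # remaining capacity of each node
    for req in sorted(requests, reverse=True):
        if req > node_capacity:
            raise ValueError(f"request {req} does not fit on any node")
        for i, remaining in enumerate(free):
            if req <= remaining:
                nodes[i].append(req)
                free[i] -= req
                break
        else:
            # No existing node has room, so open a new one.
            nodes.append([req])
            free.append(node_capacity - req)
    return nodes


# Hypothetical workloads (millicores) packed onto 4000m nodes.
placement = first_fit_decreasing([2000, 500, 1500, 1000, 3000, 1000], 4000)
print(len(placement), placement)
```

Running one workload per node would need six nodes for this example; packing them needs three.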

#2: Falling into the trap of overprovisioning

Overprovisioning takes place when your team chooses resources larger than what you actually need to run your workloads. There is a mindset of safety behind this, as no one wants disruptions in the operation of their apps.

In some business setups, teams are used to getting more resources than their workloads need ‘just in case’. Although performance-wise this approach can make perfect sense to engineers, it takes its toll on cloud waste and costs.

What’s wrong with overprovisioning?

The simplest answer is that it leads to cloud waste and unnecessary expenses that can spiral out of control.

Making overprovisioning a habit in your team is a bad idea in the long run. If you get used to selecting an instance bigger than what your workload needs just to stay on the safe side, think how this plays out as your company and apps grow. You’ll be in for a bill that will cost you an arm and a leg.

Wouldn’t it be better to spend this money on something that matters? For instance, on tackling the climate crisis, a problem that overprovisioning, by the way, makes worse.

How to deal with overprovisioning?

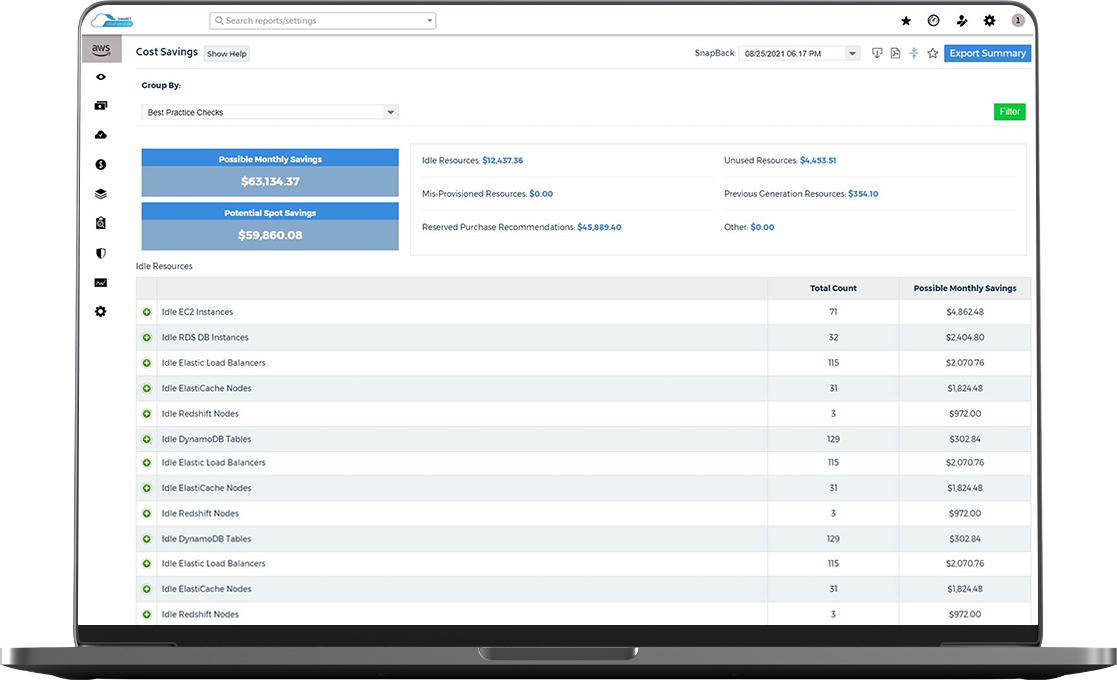

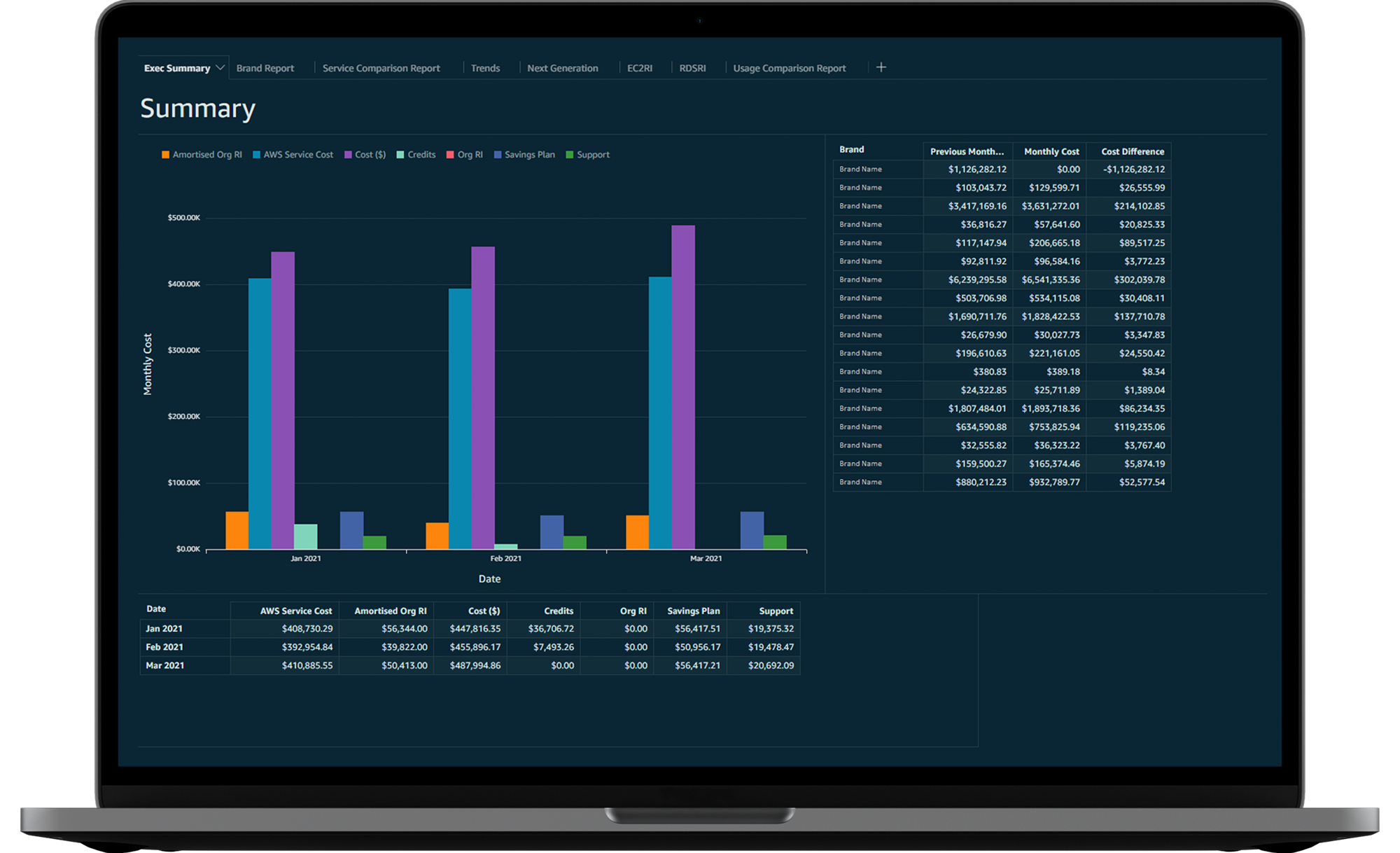

transACT’s Cloud Management Portal (TCMP) is the answer. TCMP is a powerful cloud insights solution that provides 360-degree visibility and reporting into organisations’ multi-cloud and hybrid cloud environments, enabling teams to manage cost, security, and compliance at scale.

TCMP’s powerful cloud insights lead to faster decision making, distributed cost savings, and unified reporting. TCMP is the only cloud insights solution with the flexibility to meet business requirements at scale.

- Easy integration – a robust and complete API allows technology teams to build integrations with other enterprise IT systems and applications, to easily include cloud insights into their own business processes.

- Industry-leading insights – built on a responsive interface and an API-first methodology, TCMP can be used to access data on any desktop or mobile device, allowing distributed teams to access it without restrictions.

- Enterprise-ready – manage complex, multi-cloud configurations across any combination of business units, user types, and cloud accounts.
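Whatever tool surfaces the data, the underlying rightsizing check is simple: pick the cheapest instance that covers observed peak usage plus some headroom. The sketch below assumes a made-up instance catalogue and prices; the names, sizes, and the 20% headroom are illustrative and do not describe TCMP or any provider's offering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int
    hourly_usd: float


# Hypothetical catalogue, not real provider pricing.
CATALOGUE = [
    InstanceType("small", 2, 4, 0.05),
    InstanceType("medium", 4, 8, 0.10),
    InstanceType("large", 8, 16, 0.20),
    InstanceType("xlarge", 16, 32, 0.40),
]


def rightsize(peak_vcpus: float, peak_memory_gib: float,
              headroom: float = 0.2, catalogue=CATALOGUE) -> InstanceType:
    """Return the cheapest instance type that covers peak usage plus headroom."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    candidates = [t for t in catalogue
                  if t.vcpus >= need_cpu and t.memory_gib >= need_mem]
    if not candidates:
        raise ValueError("no instance type in the catalogue is large enough")
    return min(candidates, key=lambda t: t.hourly_usd)


def monthly_waste(current: InstanceType, recommended: InstanceType,
                  hours: int = 730) -> float:
    """Money spent per month on capacity beyond the recommended size."""
    return (current.hourly_usd - recommended.hourly_usd) * hours


# A workload peaking at 2.5 vCPUs and 5 GiB that was given an "xlarge" just in case.
recommended = rightsize(2.5, 5)
print(recommended.name, round(monthly_waste(CATALOGUE[3], recommended), 2))
```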

#3: Getting haunted by orphaned cloud resources

It’s easy to spin up an instance for a project and then forget to shut it down.

As a result, many teams struggle with orphaned instances that have no ownership but still continue to generate costs.

That’s the type of issue you certainly want to avoid.

This problem is particularly acute in large organizations with numerous initiatives taking place simultaneously, with no centralized resource visibility.

Initiatives managed outside of and without the knowledge of the IT department (shadow IT) can account for 40% of all IT spending at a company. In addition, research shows that shadow cloud usage can be 10x the size of known cloud usage.

What’s wrong with orphaned cloud resources?

Orphaned cloud resources mean money down the drain, with complex sustainability implications.

In short, data centers gobble up a lot of electricity and hardware, contributing significantly to the carbon footprint of the ICT industry. The amount of energy they require doubles every four years, and every new region opening from providers like AWS or Azure contributes to this issue.

That’s why reducing cloud waste is key to halting unnecessary spending and related carbon footprint.

How to deal with this challenge?

Ensuring that you only run the resources you truly need may be challenging, especially in large organizations.

But how do you identify and retire unused instances? That’s where automation comes to the rescue again.

Automated cloud optimization solutions such as transACT’s Cloud Management Portal (TCMP) can constantly scan your usage for inefficiencies and compact resources whenever possible.

Additionally, transACT provides FaaS as a premium add-on service to our Cloud Management Portal (TCMP). It is designed to provide access to and analysis of public cloud costs, such as those from AWS, to help enterprises better plan, budget, and forecast future spending.

TCMP FaaS empowers collaboration between technology stakeholders and business and finance teams by automating the financial complexity of cloud management. transACT FaaS can help your organisation bridge any skill gap with our in-house FinOps and R&D expertise, so you don’t need to develop and manage the service yourself.
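For a sense of what an automated scan for orphaned instances looks for, here is a minimal sketch. It assumes you already have an inventory export with tags, launch time, and average CPU per instance; the `Instance` record, the `owner` tag name, and the thresholds are all hypothetical and do not reflect how TCMP or any provider API works.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Instance:
    instance_id: str
    tags: dict[str, str]
    avg_cpu_percent: float   # average over the lookback window
    launched_at: datetime


def find_orphan_candidates(instances, now, min_age=timedelta(days=14),
                           cpu_threshold=5.0, owner_tag="owner"):
    """Map instance id -> reasons it looks orphaned. Candidates need human review."""
    flagged = {}
    for inst in instances:
        if now - inst.launched_at < min_age:
            continue  # too new to judge
        reasons = []
        if not inst.tags.get(owner_tag):
            reasons.append("no owner tag")
        if inst.avg_cpu_percent < cpu_threshold:
            reasons.append("low CPU")
        if reasons:
            flagged[inst.instance_id] = reasons
    return flagged


now = datetime(2022, 1, 31, tzinfo=timezone.utc)
inventory = [
    Instance("i-a", {"owner": "team-x"}, 40.0, datetime(2021, 11, 1, tzinfo=timezone.utc)),
    Instance("i-b", {}, 35.0, datetime(2021, 12, 1, tzinfo=timezone.utc)),
    Instance("i-c", {"owner": "team-y"}, 1.2, datetime(2021, 10, 1, tzinfo=timezone.utc)),
    Instance("i-d", {}, 0.5, datetime(2022, 1, 25, tzinfo=timezone.utc)),
]
print(find_orphan_candidates(inventory, now))
```

The scan only produces candidates: low CPU alone does not prove an instance is unused, so the output is best treated as a review list rather than a deletion list.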

#4: Managing drops and spikes in demand inefficiently

Engineers building e-commerce infrastructure are well aware of how fast things can change. For example, a single influencer mention can mean millions in new sales or a website that goes down from the surge in traffic.

Most other applications also experience changes in usage over time, but striking a balance between expenses and performance remains an ongoing struggle.

What’s wrong with this?

Traffic spikes can generate a massive and unforeseen cloud bill if you leave your tab open, or cause your app to crash if you put strict limits on its resources.

When demand is low, you run the risk of overpaying. And when demand is high, the service you offer to customers may be poor.

Yes, there are cloud cost management solutions monitoring your usage, alerting you in real-time if it exceeds set levels or if there are any anomalies. Such tools can provide you with useful recommendations on adjusting your cloud resources to your current demand.

However, scaling your cloud capacity manually is difficult and time-consuming.

Apart from keeping track of everything that happens in the system, you usually need to take care of:

- Gracefully handling traffic spikes and drops – and scaling resources up and down for each virtual machine across all services you use;

- Ensuring that changes applied to one workload don’t cause any problems in other workloads;

- Configuring and managing resource groups on your own to guarantee that they contain resources suitable for your workloads.

How to fix this issue?

That’s another area where cloud automation can come into play and help you save big on time and money.

Autoscaling can automatically handle all the tasks listed above and keep cloud costs at bay. If you use the container orchestrator Kubernetes, you can benefit from three built-in mechanisms for that.

- Horizontal Pod Autoscaler (HPA) adds or removes pod replicas to match your app’s changing usage. It monitors your application to understand if the number of its replicas should change and calculates if removing or adding them would bring the current value closer to the target.

- Vertical Pod Autoscaler (VPA) increases and reduces CPU and memory resource requests to better align your allocated cluster resources with actual usage.

- Cluster Autoscaler alters the number of nodes in a cluster on supported platforms. If it identifies a node with pods that can be rescheduled to other nodes in the cluster, it evicts them and removes the spare node.
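The calculation the Horizontal Pod Autoscaler performs can be sketched in a few lines. This follows the scaling rule described in the Kubernetes documentation (desired replicas = ceil(current replicas × current metric / target metric), with a default tolerance of 10%), but it is a simplification: the real controller also accounts for pod readiness, missing metrics, and stabilization windows. The function name and the replica bounds are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified HPA rule: scale replicas in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target, do nothing
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas with a 60% CPU target (hypothetical numbers).
print(desired_replicas(4, 90, 60))  # usage above target: scale out
print(desired_replicas(4, 30, 60))  # usage below target: scale in
print(desired_replicas(4, 63, 60))  # within tolerance: unchanged
```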

With native AWS features, you only need to define your horizontal and vertical autoscaling policies, and the autonomous optimization tool will handle the rest for you.

#5: Not tapping into the opportunity of spot instances

Cloud service providers sell their unused capacity at massively reduced prices, especially if you compare the cost with their regular on-demand offer.

In AWS, spot instances are available at up to a 90% discount.

What’s tricky about spot instances?

Since you bid on spare computing resources, you never know how long these capacities will stay available. There are spot instances that come with a predefined duration; for example, AWS offers a type that gives you an uninterrupted time guarantee of up to 6 hours.

But other than that, providers can reclaim the spot instance you’re using, giving you as little as 30 seconds to 2 minutes of notice.

That’s not enough time for a human to react. Creating a new VM also takes more time than that, so you’re looking at a risk of potential downtime.

That’s why if you decide to use spot instances, you need to accept the fact that interruptions are bound to occur. They clearly aren’t the right choice for workloads that are critical or can’t tolerate them.
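Because the warning window is so short, reacting to it is a job for code rather than people. The sketch below assumes the notice arrives as a small JSON document with an `action` and a UTC `time` field, which is the shape AWS documents for its spot interruption notice; how you fetch the notice and what you do with the remaining seconds is left out, and the 30-second drain budget is a hypothetical figure.

```python
import json
from datetime import datetime, timezone


def seconds_until_interruption(notice_json: str, now: datetime) -> float:
    """Parse an interruption notice and return the seconds left before it takes effect."""
    notice = json.loads(notice_json)
    deadline = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ")
    deadline = deadline.replace(tzinfo=timezone.utc)  # the trailing "Z" means UTC
    return (deadline - now).total_seconds()


def can_drain_gracefully(seconds_left: float, drain_seconds: float = 30.0) -> bool:
    """True if there is still enough time to finish in-flight work before shutdown."""
    return seconds_left >= drain_seconds


# Example notice (assumed payload shape) received two minutes before the deadline.
notice = '{"action": "terminate", "time": "2022-01-10T08:22:00Z"}'
now = datetime(2022, 1, 10, 8, 20, 0, tzinfo=timezone.utc)
left = seconds_until_interruption(notice, now)
print(left, can_drain_gracefully(left))
```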

How to deal with this challenge?

Despite risks, spot instances work great for services that are stateless and can be scaled out, i.e. have more than one replica. Luckily, most services are stateless in modern architectures, as Kubernetes was designed for this type of setup.

Here’s what the process of working with spot instances can look like:

- You need to qualify your workload and assess how well it handles interruptions.

- Then you should examine the instances your vendor has on offer and pick the ones that will work best for your needs. A rule of thumb is to choose less popular instances and check their interruption frequency.

- Now it’s time to set the maximum bid strategically to avoid potential interruption if the price goes up.

- You may also want to consider managing spot instances in groups and requesting multiple types to increase your chances of getting them filled.

You can complete these steps manually, but to make it all work, prepare for a large number of configuration, setup, and maintenance tasks.
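The selection steps above can be sketched as a small filter-and-rank routine. It assumes you have gathered price and interruption data for candidate instance types yourself; the `SpotOffer` record, the type names, and every number below are hypothetical, and a real setup would refresh this data continuously.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpotOffer:
    instance_type: str
    spot_hourly_usd: float
    on_demand_hourly_usd: float
    interruption_rate: float  # fraction of the time the type gets reclaimed


def pick_diversified(offers, max_interruption_rate: float = 0.10, count: int = 3):
    """Drop types that are interrupted too often, then take the cheapest few.

    Requesting several types instead of one raises the odds that at least
    some of the request can be filled.
    """
    eligible = [o for o in offers if o.interruption_rate <= max_interruption_rate]
    eligible.sort(key=lambda o: (o.spot_hourly_usd, o.interruption_rate))
    return [o.instance_type for o in eligible[:count]]


offers = [
    SpotOffer("type-a", 0.030, 0.100, 0.20),  # cheapest, but reclaimed too often
    SpotOffer("type-b", 0.035, 0.100, 0.05),
    SpotOffer("type-c", 0.040, 0.120, 0.03),
    SpotOffer("type-d", 0.033, 0.100, 0.08),
    SpotOffer("type-e", 0.050, 0.110, 0.02),
]
print(pick_diversified(offers))
```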

#6: Delaying the adoption of automated cloud optimization

If you’ve embraced cloud-native technologies, you’re running Kubernetes, maybe even using modern DevOps approaches – automating this part of your infrastructure is definitely a great idea.

Listed as one of Deloitte’s top trends for 2021 and beyond, cloud automation brings tangible results for IT teams, especially in large enterprise environments.

First of all, it reduces the manual efforts you need to put into configuring virtual machines, creating clusters, or choosing the right resources, and more. This change saves time and frees your engineers to focus on more important tasks, innovating, and using your cloud infrastructure to the fullest.

What is more, automation tools allow for more frequent updates, which are key to the idea of continuous deployment. They also reduce the odds of human-made errors, lower infrastructure costs, improve the security and resilience of your systems, and enhance backup processes.

Finally, automation lets you gain the visibility of resources in use across the company that would otherwise be difficult to control.

In a nutshell, cloud automation is already becoming the new normal in the tech industry.

What’s wrong with delaying cloud automation?

So if cloud automation brings that many indisputable advantages and seems unavoidable, why aren’t all enterprises jumping at it?

Automation can pose numerous challenges, from the resistance to new solutions to fears that they may cost too much to implement and the need to update existing processes.

As in most digital transformation projects, the key to success lies in people and encouraging change on a human level. And when it comes to the workplace, McKinsey’s research proves that fears about being replaced by technology are rife among workers.

However, the advantages automation presents far outweigh the risks. This was evident, for example, in the 2021 State of DevOps Report, where 97% of the surveyed companies agreed that automation improved the quality of their work.

Delaying cloud automation in 2022 equals missing out on benefits like:

- Choosing the most efficient instance types and sizes for your apps;

- Autoscaling cloud resources to handle spikes and drops in demand;

- Eliminating resources that aren’t being used to cut costs;

- Optimizing spot instances by managing potential interruptions;

- Reducing unnecessary expenses in other areas, such as storage, backups, security, configuration changes, and more – all of that in real-time, and at a fraction of the cost you’d have to spend on manual implementation.

How to fix this challenge?

The problem of overcoming human resistance to change and new solutions is as old as the hills.

Reminding your team that automated cloud optimization will liberate them from the burden of repetitive tasks may not suffice.