Getting Ahead of Analytics Cost Creep:

Proactively Identifying Opportunities

By Tayyab Shahzada, Data Engineer

When it comes to Big Data, there are often two speeds of analytics: Near Real Time (NRT), and a slower reporting and dashboarding approach that aggregates over a variety of time ranges. When building NRT systems, processing the data in as little time as possible is considered highly important; in these systems, data freshness is secondary only to data correctness. Analysts and developers will therefore pursue every optimisation they can, down to the millisecond, to ensure timely analytics and data delivery.

We will focus instead on the need to optimise those ‘slow’ processes. These are typically reports that aggregate data or retrain an ML model on a predefined schedule, anywhere from hourly to monthly. Anything that runs daily or less frequently is often optimised only until it is ‘good enough’ to meet the deliverable consistently.

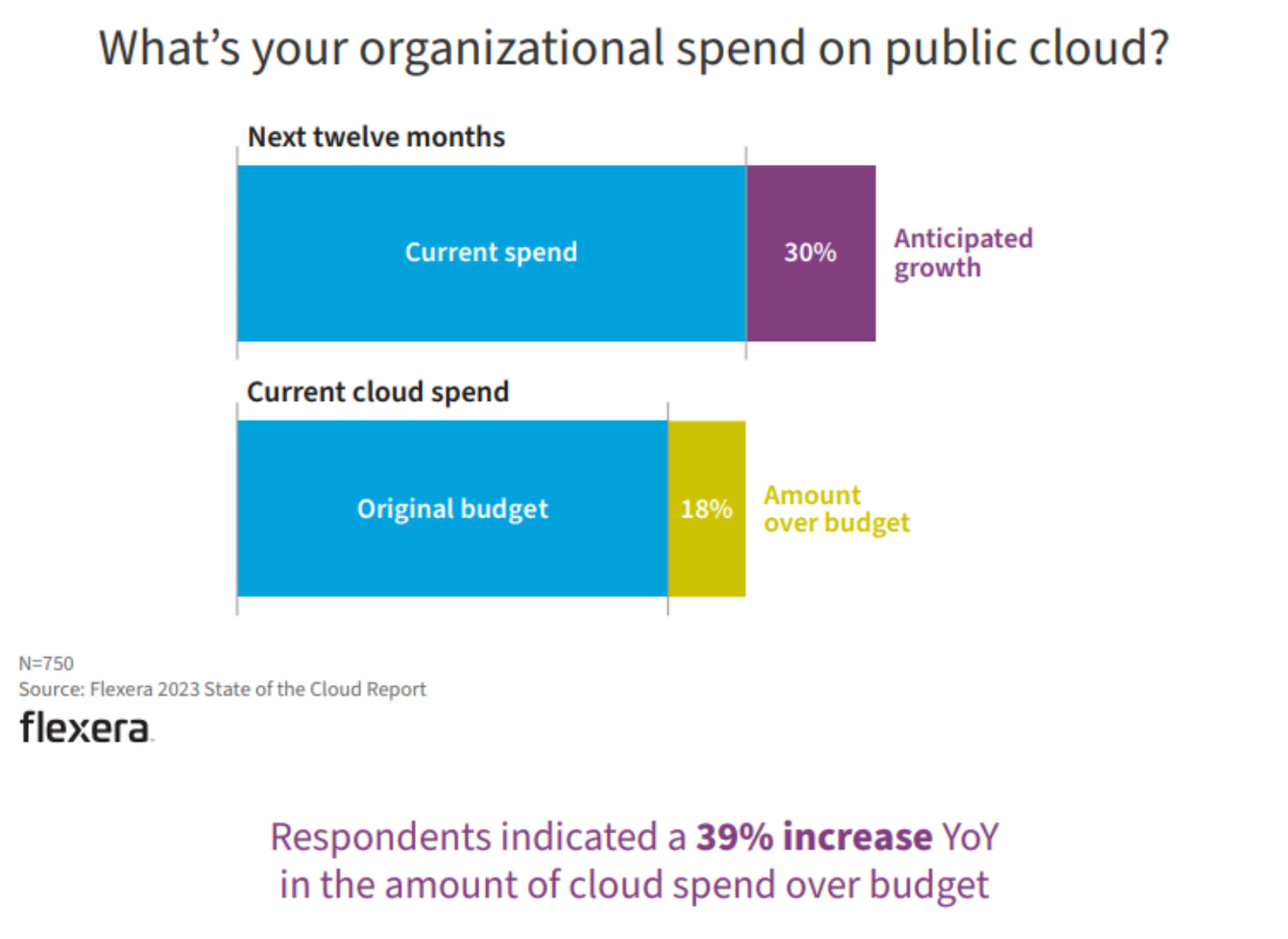

This, together with the fact that these processes tend to involve several memory-intensive steps, means it is very easy to be wasteful. While the cloud was historically considered the bastion of cost saving and efficiency, particularly S3 for storage and EC2 for compute, in recent years concern about organisational cloud spend has been rising. It is therefore increasingly important to optimise well and often, rather than allowing tech debt to build or settling for ‘good enough’, as both data access and compute costs can quickly add up. The diagram below shows self-reported cloud spend: organisations are already over budget and expect spend to keep increasing.

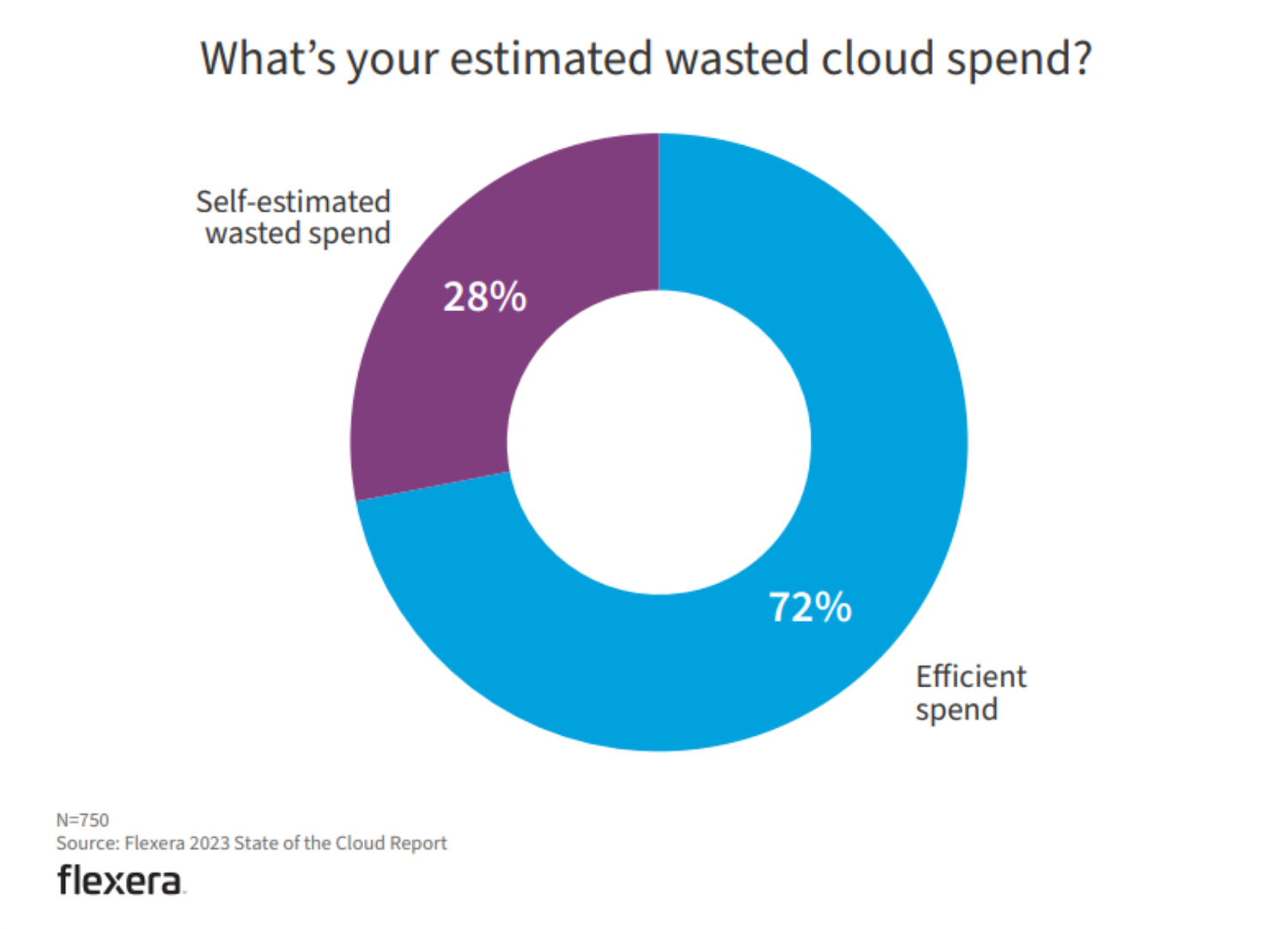

Often, these processes are put together quickly with the intention of optimising them when needed. This typically means that, instead of an easy early optimisation being made, the code is reused and its functionality expanded first, leading to wasted compute and ballooning cloud costs. This becomes a greater issue for teams that follow good reusability practices in their code bases, since the unoptimised code spreads further. As shown in the diagram below from Flexera, organisations are aware that a significant amount of their cloud spend is wasted.

As an example from my own experience: in a process built to query and load data from Athena and S3, a small part of the process was identified as badly optimised, carrying out redundant work. Optimising it reduced the data load time from over 30 minutes to under 5 minutes. When the code was originally written, however, this looked like a small optimisation relative to the total runtime: the overall end-to-end process took hours for a small report and days for a large one.

After multiple rounds of optimisation, including parallelising with AWS Batch to reduce end-to-end time and using Spot Instances to reduce cost, this small piece of unoptimised code had been copied across multiple parallel processes running on multiple EC2 instances. It was missed because the original developer had moved on, and the new developers making further optimisations overlooked that part of the process. Around a year after the parallelisation, the reports had also grown significantly bigger. Larger reports now meant more parallel tasks rather than prohibitively longer run times, and as memory requirements increased, bigger, more expensive EC2 instances were being used.

Some reports had grown to the point where thousands of parallel tasks were being run, and each of these tasks needed to load the data, running the unoptimised code and wasting up to 30 minutes of compute per task. For one of these larger reports, this cost an estimated 60,000 minutes of compute. If these reports had run on dedicated on-prem machines, this would only have mattered if it limited the number of reports that could be run or led to purchasing more hardware to handle the higher workload. On the cloud, however, it is a direct monetary loss: minutes used are minutes billed.

What had started as a small piece of unoptimised code was now costing the company up to a few thousand dollars per report. With enough reports being run on a regular basis, between the original parallelisation and the eventual fix the business had incurred up to six figures in wasted compute cost.
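To make the scaling concrete, here is a rough back-of-envelope sketch. It assumes 2,000 parallel tasks (one way to arrive at the 60,000-minute figure, given up to 30 wasted minutes per task) and a purely hypothetical rate of $2 per instance-hour; neither number comes from the original system.

```python
def wasted_compute(num_tasks: int, wasted_minutes_per_task: float,
                   hourly_rate_usd: float) -> tuple[float, float, float]:
    """Return (wasted minutes, wasted instance-hours, wasted cost in USD)."""
    # Every parallel task repeats the same unoptimised step, so the waste grows
    # linearly with the number of tasks rather than with wall-clock time.
    minutes = num_tasks * wasted_minutes_per_task
    hours = minutes / 60
    cost = hours * hourly_rate_usd
    return minutes, hours, cost


# Hypothetical inputs: 2,000 tasks, 30 wasted minutes each, $2 per instance-hour.
minutes, hours, cost = wasted_compute(2_000, 30, 2.0)
print(f"{minutes:,.0f} min = {hours:,.0f} instance-hours = ${cost:,.0f}")
```

Because the waste scales with the task count, a report twice the size doubles the wasted compute even though its wall-clock time barely changes, which is exactly why it is so easy to miss.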

Wasted compute time doesn’t just affect costs; it also affects sustainability goals and carbon emissions. Cutting wasted compute time not only saves money: less compute also means less energy consumed and less carbon emitted. This is another reason not just to rely on cost-cutting measures like Spot Fleets, but also to make speed optimisations regularly.

This experience really highlighted to me the importance of optimising early, thoroughly, and often. The initial optimisation to parallelise the process got the reports into the right hands fast enough, and using Spot Instances also cut costs compared with the original sequential version. Once these two goals had been achieved, the optimisations were deemed ‘good enough’. It wasn’t until months later, when the organisation decided that cloud costs had grown too high and a new developer stumbled on this sub-optimal process, that it was fixed. By that point, though, it had cost the company a considerable sum and produced an untracked amount of carbon emissions.

Some areas where optimisations can be made include:

- Code Optimisations: parallelise batch jobs, refactor inefficient algorithms, optimise data processing pipelines.

- Caching: implement caching at the query/data level to avoid redundant computation.

- Data Access Optimisations: compress/aggregate data, optimise data transfers between storage and processing.

- Compute Optimisations: right-size instances, utilise Spot/Reserved Instances, auto-scale infrastructure. Shorter-running code is also better suited to Spot, as less work is lost if an instance is interrupted.

- Model Optimisation: tune hyperparameters, refine feature engineering, apply model compression techniques.

- Procedural Optimisations: review new dedicated analytics services that could replace bespoke but common ETL processes. Amazon regularly launches new, highly optimised analytics services.
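As an illustration of the caching point, here is a minimal sketch of a query-level cache, assuming results can be keyed by the query text and are small enough to store as JSON. `cached_query`, `run_query` and the cache directory are hypothetical names for this sketch, not an Athena or boto3 API.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Any, Callable


def cached_query(query: str, run_query: Callable[[str], Any], cache_dir: Path) -> Any:
    """Return run_query(query), reusing a stored result if one already exists.

    run_query is a hypothetical stand-in for whatever actually executes the
    query; in this sketch its result must be JSON-serialisable.
    """
    cache_dir.mkdir(parents=True, exist_ok=True)
    # Key the cache on a hash of the query text, so identical queries share one result.
    key = hashlib.sha256(query.encode("utf-8")).hexdigest()
    path = cache_dir / f"{key}.json"
    if path.exists():
        return json.loads(path.read_text())
    result = run_query(query)
    path.write_text(json.dumps(result))
    return result


# Usage: a fake query runner that records how often it is actually called.
calls: list[str] = []


def fake_run_query(query: str) -> list[dict]:
    calls.append(query)
    return [{"region": "eu", "total": 42}]


with tempfile.TemporaryDirectory() as tmp:
    for _ in range(3):  # e.g. three tasks that need the same data
        rows = cached_query("SELECT region, SUM(total) FROM sales GROUP BY region",
                            fake_run_query, Path(tmp))

print(len(calls), rows)
```

A local directory only shares results between tasks on the same machine; for tasks spread across many instances the cache would need to live on shared storage such as S3. Concurrent tasks could also both miss the cache and run the query, so populating it once in a single upstream step before fanning out is often simpler.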

Some metrics that can be tracked, and processes that can be implemented, to help identify inefficiencies and optimise include:

- Set baseline metrics after initial deployment – query response times, job run times, data volumes.

- Track metric changes over time to spot degradation or scale inefficiencies.

- Conduct regular code/infrastructure reviews to identify optimisation opportunities.

- Track optimisation work – document initiatives, quantify impacts to benchmark effectiveness.

- Set optimisation KPIs – goals for query improvements, cost reductions, carbon footprint targets.

- Conduct retrospective analyses after optimisations to learn lessons.

- Capture optimisation ideas in a backlog for ongoing prioritisation.
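A minimal sketch of the first two points, assuming job run times are already being recorded somewhere: compare the median of recent runs against a recorded baseline and flag jobs that have drifted. The job names, run times and the 20% threshold below are hypothetical.

```python
from statistics import median


def flag_regressions(baselines: dict[str, float],
                     recent_runs: dict[str, list[float]],
                     threshold: float = 0.2) -> dict[str, float]:
    """Return {job: fractional slowdown} for each job whose median recent run
    time exceeds its baseline by more than `threshold` (0.2 means 20%)."""
    flagged: dict[str, float] = {}
    for job, runs in recent_runs.items():
        baseline = baselines.get(job)
        if baseline is None or not runs:
            continue  # no baseline recorded yet, or no recent runs to compare
        # The median is less sensitive than the mean to a single unusually slow run.
        slowdown = median(runs) / baseline - 1
        if slowdown > threshold:
            flagged[job] = round(slowdown, 3)
    return flagged


# Hypothetical job names and run times, in minutes.
baselines = {"daily_sales_report": 40.0, "weekly_model_retrain": 120.0}
recent = {
    "daily_sales_report": [41.0, 39.5, 42.0],
    "weekly_model_retrain": [150.0, 165.0, 158.0],
}
print(flag_regressions(baselines, recent))
```

Here only the retrain job is flagged, since its median of 158 minutes is about 32% above its baseline. Dividing run time by data volume before comparing would also surface scaling inefficiencies, such as per-task overhead that grows as reports get bigger.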