Migrating from On-Premises Microsoft SQL Server to AWS Serverless for Business Intelligence and Application Data

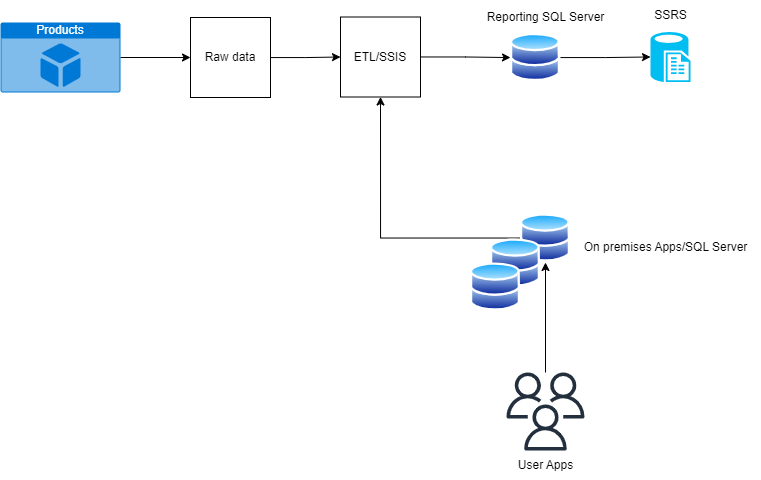

Old Architecture (On-Premises)

The legacy on-premises architecture consisted of two main data pipelines:

First, a Python script scheduled via Windows Task Scheduler would download daily worldwide sales and product data flat files from the corporate intranet to a Windows server in our European subsidiary.

Next, SQL Server Integration Services (SSIS) ETL packages would ingest these flat files, transforming and loading the worldwide data into a European SQL Server staging database. This fed both the sales website product catalog and CRM system. Further ETL jobs delivered aggregated sales data to downstream reporting databases.

Finally, internal staff accessed business intelligence reports built on SQL Server Reporting Services sourcing data from the reporting databases.

Over time, this architecture exhibited several drawbacks:

Scalability and performance degraded as data volumes grew exponentially.

Reliance on external consultants for specialized maintenance and troubleshooting.

Manual effort required for security patching and upgrades.

Increasing infrastructure and licensing costs.

This motivated our initiative to re-architect on AWS leveraging serverless technologies.

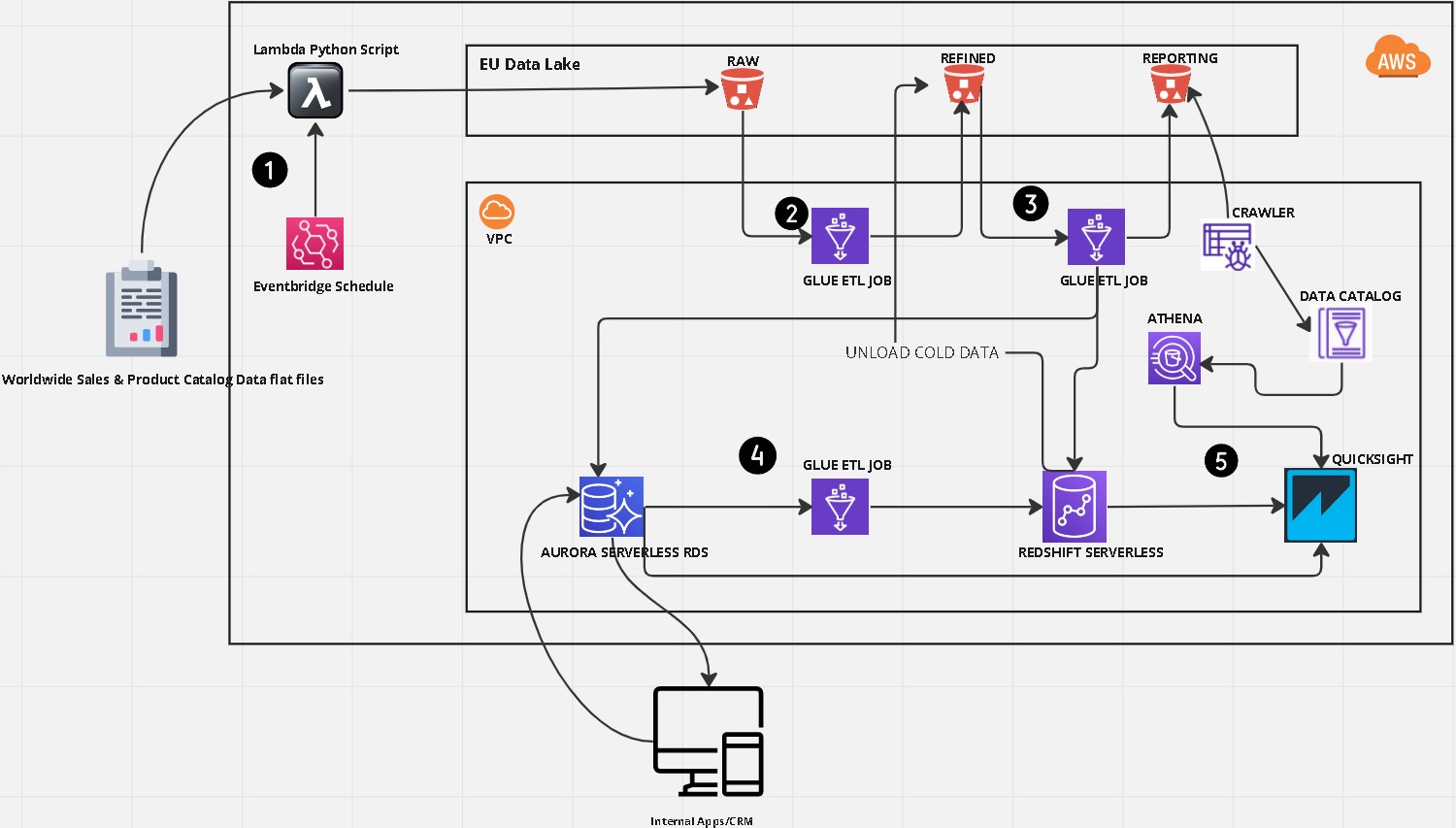

New Architecture (AWS Serverless)

To address the drawbacks of the legacy on-premises architecture, we embarked on an initiative to re-platform the entire data landscape on AWS leveraging serverless technologies.

We took an iterative approach – first building a proof of concept (PoC) focused on a subset of the data pipeline. The success of the PoC provided a compelling case to management for full adoption of the new serverless architecture on AWS.

Through leveraging AWS documentation and best practices, we gradually developed the complete new target architecture over time. Key components included:

- S3 for raw, refined, and reporting data

- Lambda functions orchestrated by EventBridge for automation

- Glue ETL jobs for data transformation

- Aurora Serverless for operational database

- Redshift Serverless for analytical database

- QuickSight for reporting and dashboards

This new serverless architecture on AWS addressed the limitations of the legacy on-premises environment and set the stage for enhanced scalability, reduced costs, and improved performance and security. The gradual migration approach enabled us to incrementally build expertise while minimizing risk.

- Lambda Functions and EventBridge

Python Lambda functions are configured to fetch raw data files from the corporate intranet SFTP server into the S3 raw bucket

EventBridge scheduled rules trigger the Lambda functions to run daily at specified intervals

Additional error handling Lambda functions implemented to check for failures and retry - AWS Glue ETL Jobs

Glue crawlers used to infer schema and populate metadata in Glue Data Catalog

ETL jobs written in Python perform transformations like selecting specific columns, parsing dates, handling nulls

Jobs optimized with features like data framing, partitioning, compression

Parameterization used for easier maintenance like table names

Jobs orchestrated into sequences with job bookmarking to enable continuity - Aurora and Redshift Tables

Aurora database is 10 node cluster of db.r5.large instances with auto pause enabled

Aurora rows have TTL property to automatically delete older data

Redshift is ra3.4xlarge 3 node cluster with automatic snapshots and scaling

Late binding views created on Aurora and Redshift to abstract underlying tables - Infrastructure as Code

CloudFormation used to manage infrastructure for Lambda, Glue, Aurora, Redshift

Terraform configurations applied for foundational networking, IAM, S3

Git repository with version control for all configuration code

Database Migration:

To migrate the original SQL Server databases to the new AWS architecture, we utilized AWS Database Migration Service (DMS) and the Schema Conversion Tool.

DMS enabled us to replicate data from the source SQL Server instances into the target Aurora and Redshift databases with minimal downtime. Key capabilities like data validation and ongoing replication facilitated a smooth transition.

Previously, SQL Server served for both transactional and analytical workloads. With the new architecture, we implemented separate purpose-built databases for OLTP (Aurora) and OLAP (Redshift) to optimize performance, scalability, and costs.

Leveraging DMS and its integration with other AWS services, we were able to successfully migrate our databases from on-premises SQL Server to the new serverless paradigm on AWS.

Gained after moving to AWS

Migrating to AWS enabled several benefits and best practices including:

Improved cost transparency and scalability from pay-per-use services. We optimized spend by monitoring usage and implementing auto-scaling policies.

Performance gains by offloading read traffic to multi-AZ read replicas for Aurora and Redshift.

Automated database backups and simplified replication through native AWS services.

Infrastructure as code adoption using Terraform for provisioning resources and version control.

Reduced administrative overhead by leveraging AWS serverless services like Lambda and Glue.

Storage and compute managed by AWS, eliminating maintenance of on-premises hardware.

By moving to a modern serverless architecture on AWS leveraging database services purpose-built for transactional and analytical workloads, we were able to resolve scaling challenges and reduce costs while increasing performance, resilience, and development velocity.

Migrating our business intelligence and application data infrastructure to a serverless architecture on AWS delivered tremendous benefits for our organization. It enabled us to cost-effectively scale while improving performance, reducing maintenance burdens, and leveraging purpose-built database services.

This migration represented one of the best technology investments our company has made. We are now actively exploring additional AWS services like EMR and SageMaker to further capitalize on our data. Initiatives are underway applying machine learning and deeper analytics to derive enhanced business insights.

We hope this post summarizing our migration experience from on-premises SQL Server to AWS serverless helps provide valuable lessons learned for others undertaking similar modernization journeys.